Be proactive and prevent security infrastructure problems.

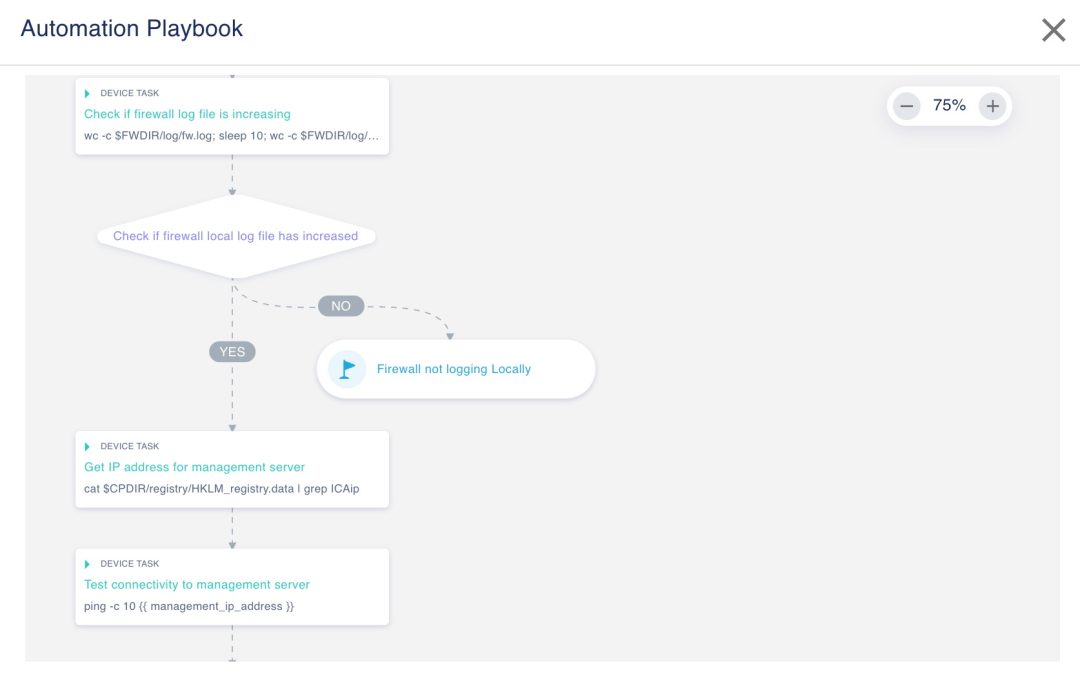

Save time with network automation.

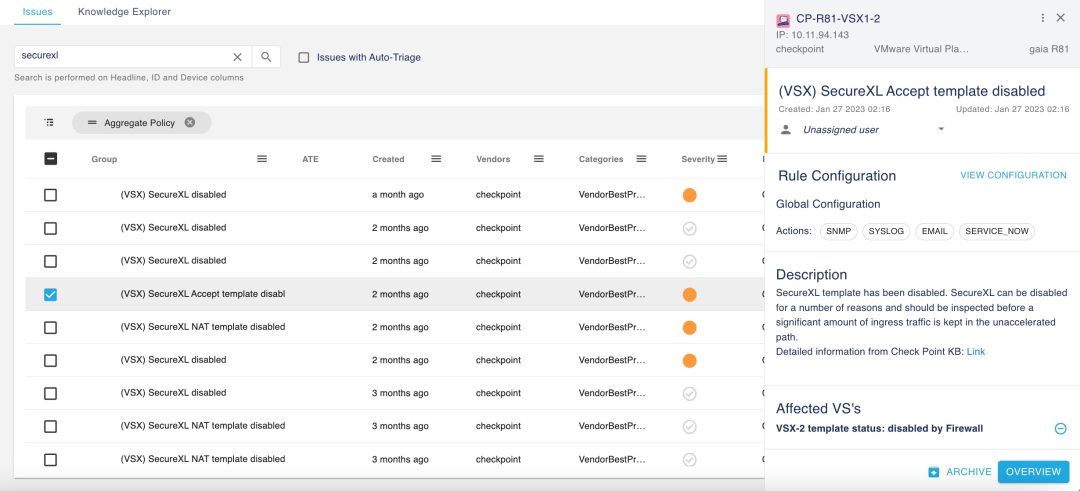

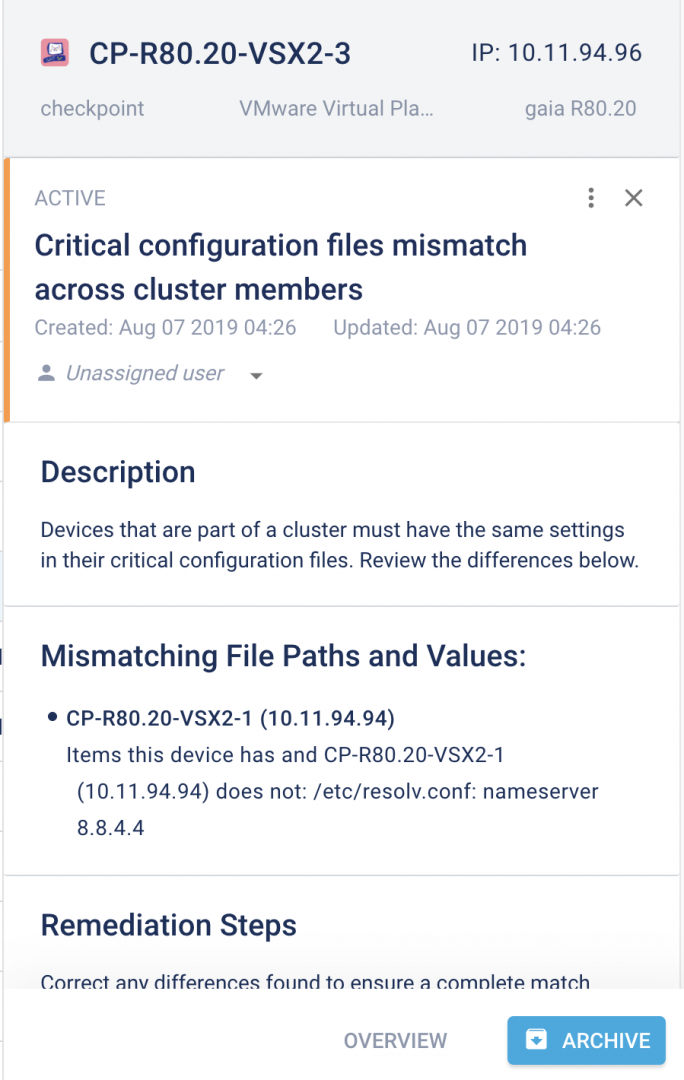

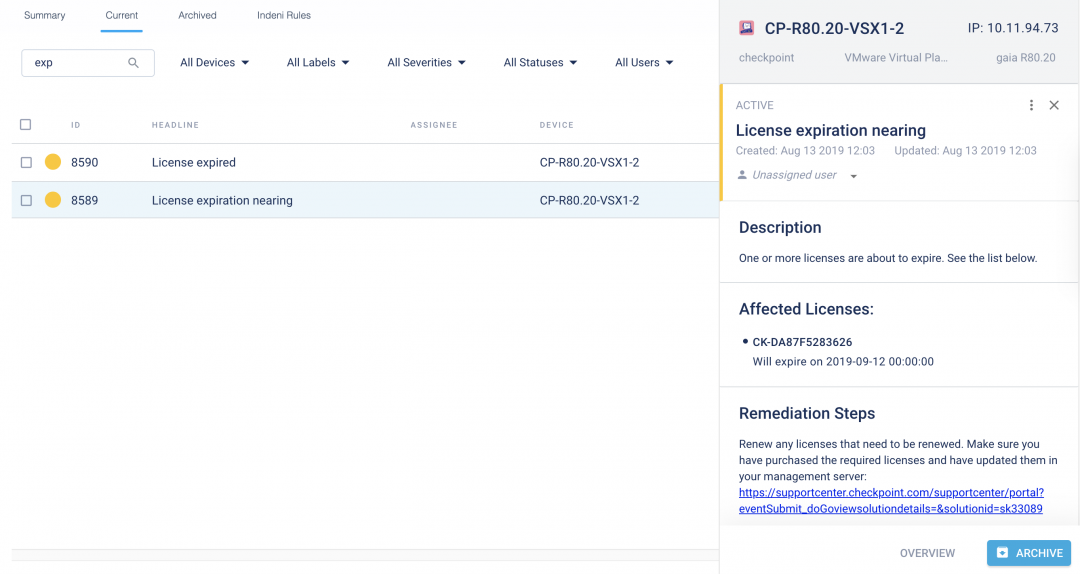

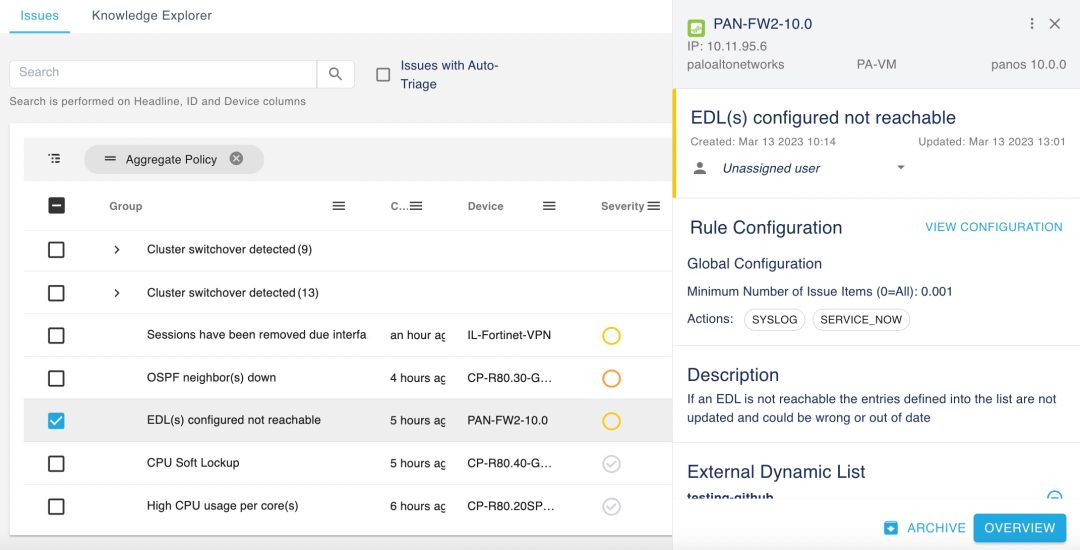

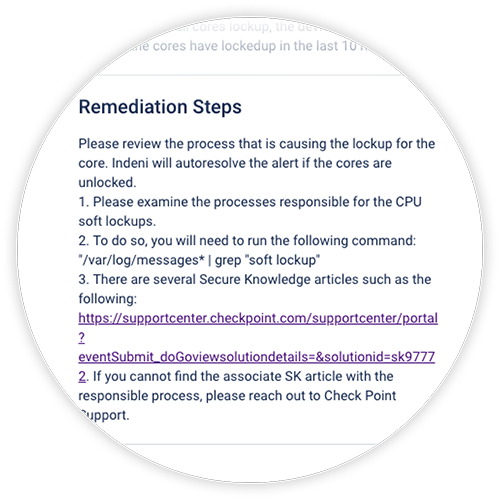

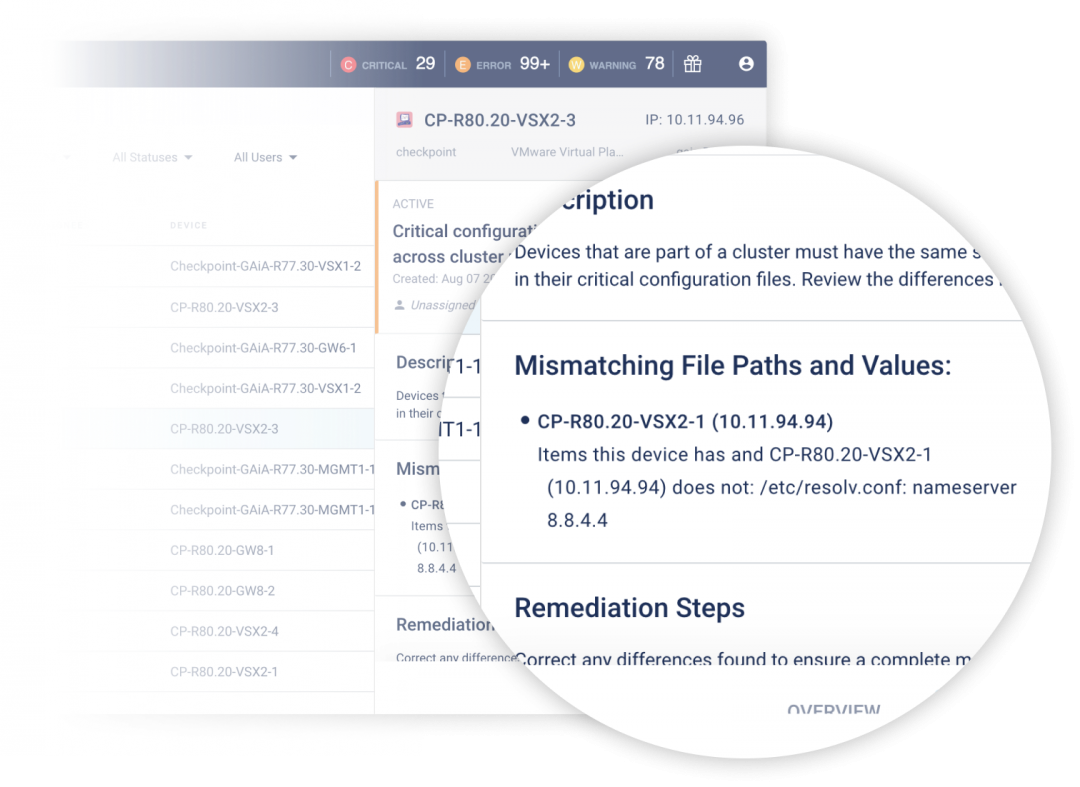

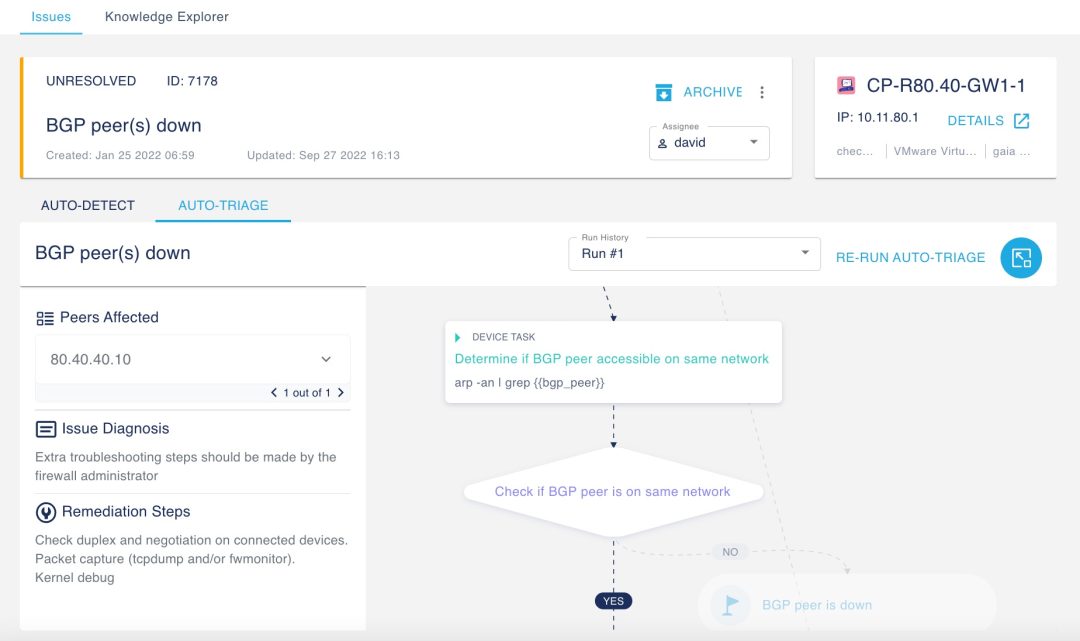

Diagnose issues automatically.

Infrastructure Automation for Day 2 Tasks

Indeni curates vetted, community-sourced expertise into certified, production-ready automation elements, giving security infrastructure operations unprecedented visibility and agility.

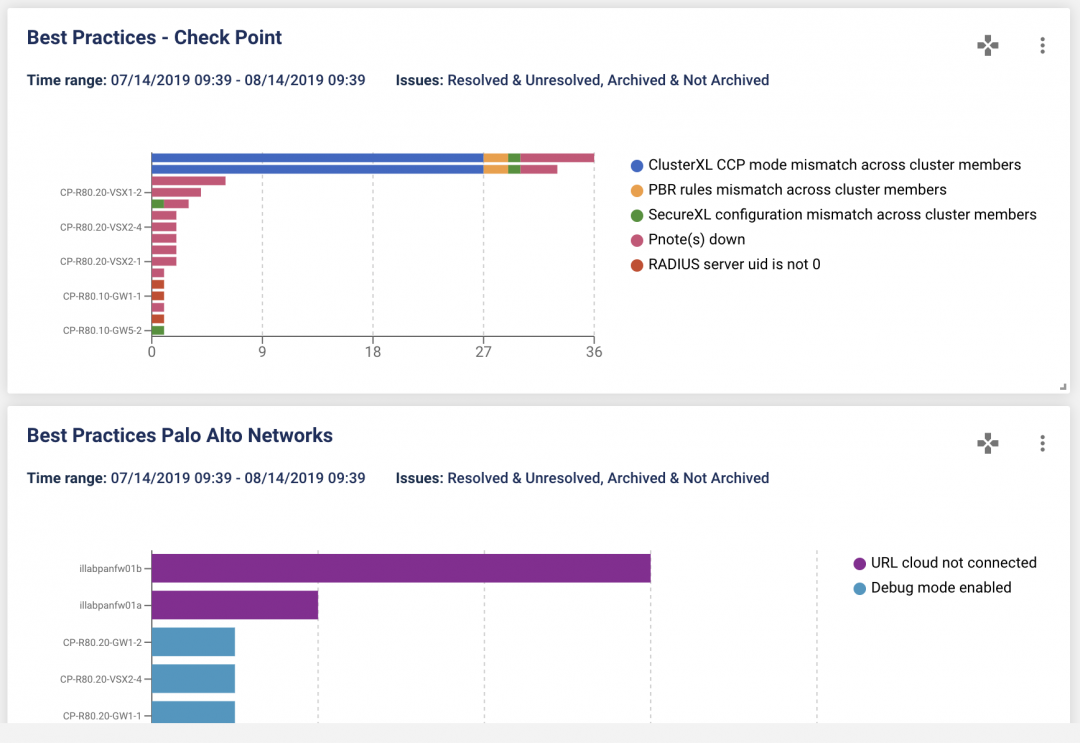

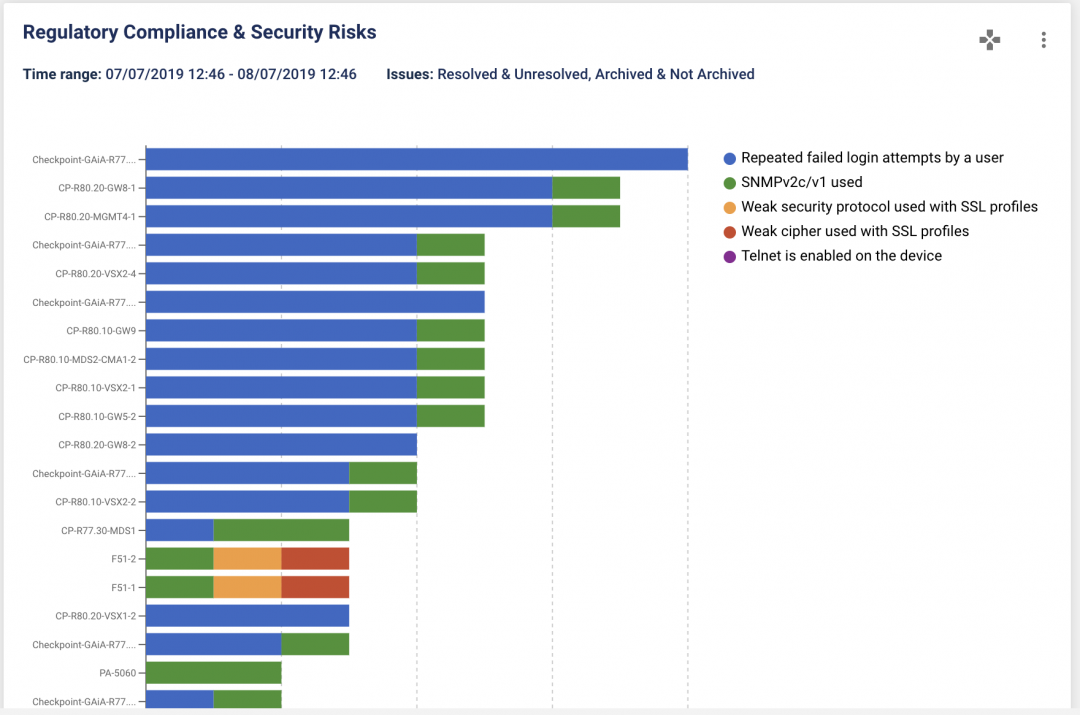

Use Cases